The velocity of innovation in the AI coding agent space is incredible—especially in TypeScript. Tons of different AI coding agents are being developed across the ecosystem: everything from local CLI agents (like Claude Code and Gemini-CLI), IDEs (like Cursor), and extensions (like Cline) to SaaS agents (like v0 and Bolt) and now open-source coding evaluation harnesses (like the recently released Next.js Evals). These diverse and fast-paced developments, while exciting, are surfacing a fundamental, recurring problem for developers: reinventing the wheel to solve the same infrastructure challenges.

This post introduces AI Code Agents, my new, early-stage project: a TypeScript SDK built on top of the AI SDK to address this challenge and foster faster, more efficient iteration across the ecosystem.

The Problem: Fragmentation and Vendor Lock-in

Why do we need a TypeScript SDK for coding agents? Let’s take a closer look at the status quo. An AI agent that explains, reasons about, generates, or executes code depends on several layers of infrastructure, and current approaches to building those layers are often tightly coupled and inflexible.

A Lack of Modularity Slows Us Down

In most current coding agent projects, the entire stack—from the LLM communication layer to the tool implementations and the execution environment—is custom-built and tightly bound to a specific provider.

- Model Provider Lock-in: Many agents are built around a single provider, making it difficult to switch to a different model (say, trying Anthropic’s latest model instead of an OpenAI one) without extensive refactoring.

- Tool Constraints: The agent’s callable functions (its “tools”) are frequently implemented only to satisfy the tool-calling constraints of that one LLM provider.

- Proprietary Execution Silos: Tools are typically tied to a single, proprietary code execution environment (e.g. E2B or Vercel Sandbox). If that environment doesn’t fit your needs, you’re forced to rewrite the tools themselves.

This leads to a harsh reality: whenever you attempt to switch any of these components, you face reimplementing a substantial part of your agent’s logic. This is wasted effort and a key area ripe for abstraction.

The Solution: Environment and Tool Abstraction

Fortunately, a foundation for solving the LLM communication piece already exists. The AI SDK by Vercel provides a unified API and a proposed standard for communicating with diverse LLM providers.

The AI Code Agents SDK is built directly on top of the AI SDK, with a clear and focused objective: to eliminate vendor lock-in by providing a flexible, type-safe framework built on core environment and tool abstractions.

Key Architectural Concepts

The project introduces two core concepts to achieve this flexibility:

1. Environment Abstraction

Environments are the sandboxed execution contexts for agents. The SDK provides an interface layer that allows the same tools to run on different environments, so you write your tools once and run them anywhere.

Currently, the SDK supports:

- docker: Containerized environments.
- node-filesystem: Node.js filesystem operations.
- unsafe-local: Local development (use with caution!).
- mock-filesystem: Testing, with no risk of actual filesystem modifications.

Planned support for external sandboxes like E2B and Vercel Sandbox will further cement the “run anywhere” promise.
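To make the “write once, run anywhere” idea more concrete, here is a minimal sketch of what such an interface layer could look like. The Environment interface, the InMemoryEnvironment and NodeFilesystemEnvironment classes, and the countLines tool are all hypothetical names for illustration. They are not the SDK’s actual API. The in-memory class plays a role similar to mock-filesystem. The filesystem class is scoped to a root directory and, as an assumption of this sketch, rejects paths that escape that directory.

```typescript
import { promises as fs } from "node:fs";
import * as path from "node:path";

// Hypothetical environment contract: the only thing tools are allowed to depend on.
interface Environment {
  readFile(relativePath: string): Promise<string>;
  writeFile(relativePath: string, content: string): Promise<void>;
}

// In-memory environment: nothing touches the real disk, which makes it handy for tests.
class InMemoryEnvironment implements Environment {
  private files = new Map<string, string>();

  async readFile(relativePath: string): Promise<string> {
    const content = this.files.get(relativePath);
    if (content === undefined) {
      throw new Error(`File not found: ${relativePath}`);
    }
    return content;
  }

  async writeFile(relativePath: string, content: string): Promise<void> {
    this.files.set(relativePath, content);
  }
}

// Node.js filesystem environment scoped to a root directory (illustrative assumption).
class NodeFilesystemEnvironment implements Environment {
  constructor(private readonly rootDir: string) {}

  // Resolve a path inside the root and refuse anything that escapes it (e.g. "../x").
  private resolve(relativePath: string): string {
    const root = path.resolve(this.rootDir);
    const resolved = path.resolve(root, relativePath);
    if (resolved !== root && !resolved.startsWith(root + path.sep)) {
      throw new Error(`Path escapes environment root: ${relativePath}`);
    }
    return resolved;
  }

  async readFile(relativePath: string): Promise<string> {
    return fs.readFile(this.resolve(relativePath), "utf8");
  }

  async writeFile(relativePath: string, content: string): Promise<void> {
    const target = this.resolve(relativePath);
    await fs.mkdir(path.dirname(target), { recursive: true });
    await fs.writeFile(target, content, "utf8");
  }
}

// A tool written once against the interface; it never knows which environment it runs in.
async function countLines(env: Environment, relativePath: string): Promise<number> {
  const content = await env.readFile(relativePath);
  return content.split("\n").length;
}
```

The countLines tool only sees the Environment interface. Moving it from the in-memory environment to the real filesystem is therefore a change at the call site, not in the tool.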

2. Flexible Tool System

The AI Code Agents SDK includes a comprehensive set of built-in file system and command tools, all with strict TypeScript typing and Zod schemas for validation. This ensures that the arguments the agent passes to each tool are checked against the expected structure before the tool runs.

Tools such as read_file, write_file, and run_command are decoupled from the underlying environment implementation. Each tool expects an environment instance as a constructor parameter, and any environment implementation will do.
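Here is a sketch of this constructor-injection pattern. The hypothetical ReadFileTool class below receives its environment through the constructor. It validates the model-supplied input before using it. To keep the example dependency-free, it uses a small hand-written type guard where the SDK uses Zod schemas. The class, interface, and method names are illustrative and are not the SDK’s actual API.

```typescript
// Hypothetical minimal environment contract the tool depends on.
interface Environment {
  readFile(relativePath: string): Promise<string>;
}

// Shape of the input the model is expected to send for this tool.
interface ReadFileInput {
  path: string;
}

// Hand-written stand-in for a Zod schema: checks untrusted tool-call arguments.
function isReadFileInput(value: unknown): value is ReadFileInput {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as { path?: unknown };
  return typeof candidate.path === "string" && candidate.path.length > 0;
}

class ReadFileTool {
  readonly name = "read_file";

  // Any Environment implementation can be injected here.
  constructor(private readonly environment: Environment) {}

  async execute(rawInput: unknown): Promise<string> {
    if (!isReadFileInput(rawInput)) {
      throw new Error(`Invalid input for ${this.name}: expected { path: string }`);
    }
    return this.environment.readFile(rawInput.path);
  }
}

// A trivial fixed-content environment, enough to exercise the tool.
class FixedEnvironment implements Environment {
  constructor(private readonly files: Map<string, string>) {}

  async readFile(relativePath: string): Promise<string> {
    const content = this.files.get(relativePath);
    if (content === undefined) throw new Error(`File not found: ${relativePath}`);
    return content;
  }
}
```

Validation happens inside the tool, before the environment is touched. Malformed arguments from the model are therefore rejected regardless of which environment is injected.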

While it’s naturally possible to set up individual tools, the SDK also introduces “safety levels” for setting up groups of tools in bulk:

- readonly: Only safe read operations.
- basic: Allows read and write operations, but prevents deletion or shell commands.
- all: Includes riskier tools, such as delete_file and run_command.
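One way to picture these safety levels is as nested sets of tool names, where each level extends the one below it. The mapping below is a hypothetical sketch based only on the tool names mentioned in this post. The SDK’s real groupings may include additional tools. The toolsForLevel helper is an illustrative name, not part of the SDK.

```typescript
// Safety levels as described above; each level is a superset of the previous one.
type SafetyLevel = "readonly" | "basic" | "all";

// Illustrative tool groupings built by extending the lower level.
const READONLY_TOOLS = ["read_file"] as const;
const BASIC_TOOLS = [...READONLY_TOOLS, "write_file"] as const;
const ALL_TOOLS = [...BASIC_TOOLS, "delete_file", "run_command"] as const;

type ToolName = (typeof ALL_TOOLS)[number];

// Hypothetical helper: resolve a safety level to the tool names it enables.
function toolsForLevel(level: SafetyLevel): readonly ToolName[] {
  switch (level) {
    case "readonly":
      return READONLY_TOOLS;
    case "basic":
      return BASIC_TOOLS;
    case "all":
      return ALL_TOOLS;
  }
}
```

Each level is defined by extending the previous one. This makes it harder to grant a riskier tool at a lower level by accident.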

Keep in mind that “riskier” should be taken with a grain of salt. Every tool that isn’t read-only carries some risk when an LLM can call it, which is why it’s strongly advised to run coding agents only in sandboxed environments.

You can even define a custom array of tools, or configure specific tools (like write_file) to require user approval via a needsApproval flag.
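The approval flow can be sketched as a wrapper that checks a needsApproval flag before executing a tool. Only the flag name comes from the SDK. The ApprovableTool type, the approve callback, and executeWithApproval are hypothetical names used for illustration. In a real agent, the callback would prompt the user, for example in a terminal or an editor dialog.

```typescript
// Hypothetical tool shape with an opt-in approval flag.
interface ApprovableTool<Input> {
  name: string;
  needsApproval?: boolean;
  execute(input: Input): Promise<string>;
}

// Callback that asks the user; resolves to true to allow the call.
type ApproveFn = (toolName: string, input: unknown) => Promise<boolean>;

// Run the tool, asking for approval first only if the tool requires it.
async function executeWithApproval<Input>(
  tool: ApprovableTool<Input>,
  input: Input,
  approve: ApproveFn,
): Promise<string> {
  if (tool.needsApproval) {
    const approved = await approve(tool.name, input);
    if (!approved) {
      // Report the denial back to the model instead of silently skipping the call.
      return `Tool call to ${tool.name} was denied by the user.`;
    }
  }
  return tool.execute(input);
}
```

In this sketch, a denial comes back as a result string rather than an exception. The model can read that result and adjust its plan instead of the run failing.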

A Practical Example of Decoupling

The true power of this architecture is realized when you can configure an agent to run with different provider models and different execution environments using the same core logic.

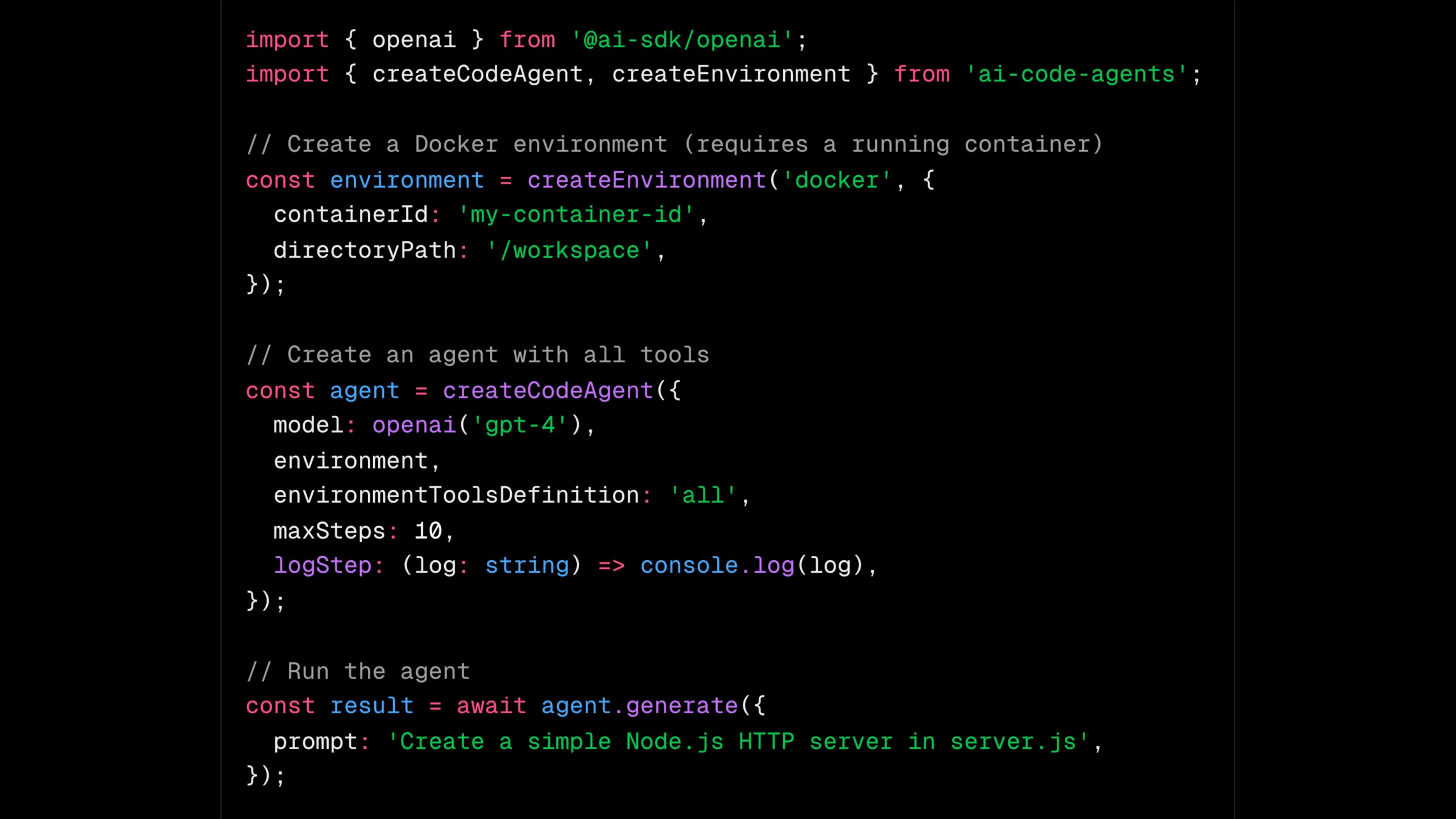

Here is a basic code example for what a coding agent working on a WordPress plugin could look like:

```typescript
import { createCodeAgent, createEnvironment } from 'ai-code-agents';

// Create a Docker environment (requires a running container)
const environment = createEnvironment('docker', {
  containerId: 'my-container-id',
  directoryPath: 'wp-content/plugins/my-ai-plugin',
});

// Create an agent with all tools
const agent = createCodeAgent({
  model: 'anthropic/claude-sonnet-4.5', // swap this model ID to try a different provider
  environment,
  environmentToolsDefinition: 'all',
  maxSteps: 10,
  logStep: (log: string) => console.log(log),
});

// Run the agent (top-level await requires an ES module context)
const result = await agent.generate({
  prompt: 'Add a WordPress settings page for configuring AI provider API credentials',
});

console.log(result.text);
```

If you wanted to test this agent with another model, it would be as simple as swapping the model, thanks to the AI SDK. And if you wanted to run it in your own proprietary Kubernetes sandbox, it would be as simple as swapping the environment, thanks to the AI Code Agents SDK.

By abstracting the environment and adhering to the AI SDK standard, we’ve made this agent model-agnostic. It can also move between execution environments with a single configuration change: for example, from a local filesystem during development to a Docker container, or to Vercel Sandbox once support for it lands.

A Call to Collaborate

By reducing the amount of “reinventing the wheel” required to set up a robust coding agent, we can help the entire TypeScript ecosystem iterate faster. The time saved on infrastructure can be channeled into improving the core reasoning, planning, and evaluation logic that truly defines a superior AI agent.

I encourage any developers interested in the future of modular AI agents to explore the AI Code Agents GitHub repository. Keep in mind the project is in its very early stages—early feedback at this point is crucial to shape the direction of the project. Contributions are welcome and much appreciated. This is a community effort; let’s improve how we build coding agents, together.
