In my initial post that announced the AI Services plugin for WordPress, I mentioned several times how it simplifies using AI in WordPress by providing AI service abstractions as central infrastructure.

In this post, let’s take a more hands-on look at how you as a developer can use the AI Services plugin: We will write a WordPress plugin that generates alternative text for images in the block editor, a crucial aspect of good accessibility and one where AI can be quite helpful. Since the feature will be built on top of the AI Services plugin, it will work with Anthropic, Google, OpenAI, or any other AI service that you may want to use. And the entire plugin will consist of fewer than 200 lines of code, most of which will in fact be for the plugin’s UI.

Before we get started, let’s take a brief look at what the finished plugin will look like. The video below gives a quick walkthrough: With a newly added button in the image block toolbar, we can generate alternative text for the image. In the video, I first demonstrate the functionality using OpenAI’s ChatGPT models, and afterwards I repeat the same step using Google’s Gemini models.

Notice how, after generating the alt text for the first time, I switch to the other tab with the AI Services settings screen. There, I paste my Gemini API key and save, so that after refreshing the post in the block editor, Gemini is used instead of ChatGPT. Briefly opening the chatbot confirms this: it now shows “Google (Gemini)”, while initially it showed “OpenAI (ChatGPT)”.

But now, let’s dive right in.

Prerequisites

All you need for this tutorial is a WordPress site you can use for testing, a code editor, and Node.js installed on your system so that you can access npm.

Please install and activate the AI Services plugin on the WordPress site, as our plugin will use it as a dependency. You’ll also need to configure at least one of the built-in AI services (Anthropic, Google, OpenAI) via Settings > AI Services in the WP Admin menu.

After that, we can get started with writing the plugin. For the remainder of this article, let’s assume our plugin is called “Add Image Alt Text”, and its slug is “add-image-alt-text”. Therefore, please create a new folder add-image-alt-text in your WordPress site’s wp-content/plugins directory.

Scaffolding the plugin structure

Since the plugin integrates with the block editor and since the AI infrastructure comes from the AI Services plugin, it will be mostly written in JavaScript. We will need a single JavaScript file for the editor integration, and then a PHP file that acts as the plugin main file and has the sole purpose of conditionally enqueuing the JavaScript file.

To set up the JavaScript build tooling, we’ll use the @wordpress/scripts package, which makes this a zero-config operation. We only need to create a package.json file to manage the dependency.

The JavaScript file will be placed in a src directory, so that we can rely on the default configuration of the @wordpress/scripts package. This also means the asset will be built in a build directory.

Here’s the overall plugin file structure:

```text
📁 add-image-alt-text
    📁 src
        ❇️ index.js
    ❇️ add-image-alt-text.php
    ❇️ package.json
```

For the package.json file, the minimal structure needed is the following:

```json
{
	"devDependencies": {
		"@wordpress/scripts": "^30.3.0"
	},
	"scripts": {
		"build": "wp-scripts build",
		"format": "wp-scripts format",
		"lint-js": "wp-scripts lint-js"
	}
}
```

This way, the @wordpress/scripts dependency is included, and we’ve also configured a few relevant scripts that use it, for our convenience.

Let’s also scaffold the other files src/index.js and add-image-alt-text.php, for now simply as empty files.

Implementing the block editor UI foundation

Let’s recap what we want to implement for the block editor: We’d like the core/image block to provide an additional button in its block toolbar that we can use to generate alternative text for the image.

A special shoutout and thanks to my friend and colleague Pascal Birchler, who came up with most of the block editor UI implementation that I’m using in this tutorial, which he used for his client-side AI experiments project.

In order to implement such a UI control in the block toolbar, we’ll need to write a custom React component that uses the BlockControls component to inject our custom UI into the block toolbar. We’ll then need to use the JavaScript filter editor.BlockEdit to augment the default editing component for the core/image block with our custom component.

Let’s get started by scaffolding an empty ImageControls component and implementing the filter. Since the filter runs for every block in the content, we need to make sure to only inject our custom component for the core/image block specifically.

```javascript
import { createHigherOrderComponent } from '@wordpress/compose';
import { addFilter } from '@wordpress/hooks';

function ImageControls( { attributes, setAttributes } ) {
	// TODO: Replace this with the actual component implementation.
	return null;
}

const addAiControls = createHigherOrderComponent(
	( BlockEdit ) => ( props ) => {
		if ( props.name === 'core/image' ) {
			return (
				<>
					<BlockEdit { ...props } />
					<ImageControls { ...props } />
				</>
			);
		}
		return <BlockEdit { ...props } />;
	},
	'withAiControls'
);

addFilter(
	'editor.BlockEdit',
	'add-image-alt-text/add-ai-controls',
	addAiControls
);
```

By wrapping the original BlockEdit component in a higher-order component, we can decide per block what to render: For the core/image block, we combine it with our custom ImageControls component; for any other block, we simply render it as is. Since we’re passing the block props to the ImageControls component, we can use them in our custom component as well (e.g. the block’s attributes and its setAttributes function, which you can already see referenced in the ImageControls component declaration).
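To make the composition more tangible, here is a toy model in plain JavaScript that runs outside the editor. The tiny filter registry is a hypothetical stand-in, not the @wordpress/hooks implementation (which also handles priorities, filter removal, and more), and plain functions returning strings stand in for React components. It only illustrates how a value filter like editor.BlockEdit passes the current component through each callback, and how the same branching as addAiControls leaves non-image blocks untouched.

```javascript
// Toy filter registry: a hypothetical stand-in for @wordpress/hooks that ignores priorities.
const filters = {};

function addFilter( hookName, namespace, callback ) {
	if ( ! filters[ hookName ] ) {
		filters[ hookName ] = [];
	}
	filters[ hookName ].push( { namespace, callback } );
}

function applyFilters( hookName, value ) {
	// Each callback receives the value returned by the previous one.
	return ( filters[ hookName ] || [] ).reduce(
		( current, { callback } ) => callback( current ),
		value
	);
}

// Plain functions standing in for React components.
const BlockEdit = ( props ) => `edit:${ props.name }`;
const ImageControls = ( props ) => `controls:${ props.name }`;

// Same branching as the real higher-order component: only core/image gets the extra controls.
const addAiControls = ( Inner ) => ( props ) =>
	props.name === 'core/image'
		? [ Inner( props ), ImageControls( props ) ]
		: Inner( props );

addFilter( 'editor.BlockEdit', 'add-image-alt-text/add-ai-controls', addAiControls );

const FilteredBlockEdit = applyFilters( 'editor.BlockEdit', BlockEdit );

console.log( FilteredBlockEdit( { name: 'core/image' } ) );
console.log( FilteredBlockEdit( { name: 'core/paragraph' } ) );
```

The image block yields both the original output and the controls, while any other block only yields the original output, which mirrors the fragment-versus-passthrough decision in the real filter.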

Our custom ImageControls component

As mentioned earlier, we’ll need to use the BlockControls component inside of our ImageControls component in order to inject custom UI elements into the block toolbar. For the actual UI element to inject, we can use the built-in ToolbarButton component, which we simply need to wrap with the BlockControls component so that the button appears in the right place.

Additionally, we’ll need a tiny extra component for the icon that we would like to use for our toolbar button. A custom SVG icon would probably be best here, but for simplicity’s sake we’ll go with the format-image icon from the WordPress Dashicons, which we can render using the built-in Icon component.

Let’s update our ImageControls component with the UI foundation.

```javascript
import { createHigherOrderComponent } from '@wordpress/compose';
import { Icon, ToolbarButton } from '@wordpress/components';
import { useState } from '@wordpress/element';
import { addFilter } from '@wordpress/hooks';
import { BlockControls } from '@wordpress/block-editor';
import { __ } from '@wordpress/i18n';

function ImageIcon() {
	return <Icon icon="format-image" />;
}

function ImageControls( { attributes, setAttributes } ) {
	const [ inProgress, setInProgress ] = useState( false );

	// If no image is assigned yet, bail early since we can't generate alternative text for nothing.
	if ( ! attributes.url ) {
		return null;
	}

	const generateAltText = async () => {
		setInProgress( true );
		// TODO: Logic to actually generate the image's alternative text.
		setInProgress( false );
	};

	return (
		<BlockControls group="inline">
			<ToolbarButton
				label={ __(
					'Write alternative text',
					'add-image-alt-text'
				) }
				icon={ ImageIcon }
				showTooltip={ true }
				disabled={ inProgress }
				onClick={ generateAltText }
			/>
		</BlockControls>
	);
}

// Remaining scaffolding (see previous code snippet).
```

Notice the inProgress state variable. While it doesn’t serve a purpose yet, it will be used to temporarily disable our toolbar button while the AI model is generating alternative text, giving the user visual feedback about the ongoing process after they click the button.

With the current code, the custom toolbar button should already appear. It won’t do anything (other than becoming disabled for a split second due to the inProgress state), but it should be there.

Let’s validate that. Before diving into the functionality, let’s build the current JavaScript code and scaffold the remainder of the plugin so that we can test it in its current state.

Running an initial JavaScript build

Building the JavaScript is straightforward, thanks to the @wordpress/scripts package. If you haven’t installed the dependencies from package.json yet, do that first, then run the build:

```bash
npm install
npm run build
```

After running the build, you should find the files build/index.js and build/index.asset.php. The first is the built version of our JavaScript code, while the second provides metadata about that JavaScript file, which we can use to register it in PHP.

Let’s do that next, by scaffolding our plugin main file.

Scaffolding the plugin main file

As outlined before, we’ll use the slug add-image-alt-text for our plugin, with the add-image-alt-text.php file as the plugin main file. All this file needs to do is conditionally load (“enqueue”) the JavaScript asset that we started working on for the block editor.

In other words, this file needs to contain two things:

- The plugin header, providing required metadata about the plugin.

- An action callback hooked into the enqueue_block_editor_assets action, which conditionally enqueues our script.

For the plugin header, we can simply specify the data for the required fields as usual. One additional aspect to include though is the “Requires Plugins” field, through which we will declare the AI Services plugin as a plugin dependency, by referencing its plugin slug ai-services.

For the action callback, we need to use the wp_enqueue_script() function to enqueue our built JavaScript file from build/index.js. To automate the values for the $deps and $ver parameters of wp_enqueue_script(), we can read the build/index.asset.php file that is also generated as part of the build process. That way, we don’t have to provide these values manually, which would be tedious and error-prone. The only caveat is that only WordPress Core’s built-in dependencies are recognized automatically. We could extend our tooling to also recognize dependencies from the AI Services plugin, but for simplicity’s sake let’s just hard-code that dependency, which is the ais-ai-store JavaScript asset. We’ll need it later to access the AI Services plugin’s JavaScript API.
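To illustrate why the AI Services dependency has to be added by hand, here is a simplified sketch of the naming convention that the dependency extraction in @wordpress/scripts applies to imports: an `@wordpress/*` package import maps to a `wp-*` script handle. The function names are hypothetical, and the sketch deliberately ignores the real tooling’s special cases (for example React and lodash) and the few @wordpress packages it bundles instead of externalizing. The key point is that our code reaches the AI Services API through the window.aiServices global rather than through an import, so nothing produces the ais-ai-store handle automatically.

```javascript
// Simplified sketch of the default import-to-handle convention: '@wordpress/data' -> 'wp-data'.
// Hypothetical helper; the real dependency extraction has additional special cases.
function requestToHandle( request ) {
	const prefix = '@wordpress/';
	return request.startsWith( prefix ) ? `wp-${ request.slice( prefix.length ) }` : null;
}

// Combine detected handles with manually added ones, dropping duplicates.
function collectDependencies( importedPackages, manualHandles ) {
	const detected = importedPackages.map( requestToHandle ).filter( Boolean );
	return [ ...new Set( [ ...detected, ...manualHandles ] ) ];
}

const deps = collectDependencies(
	[
		'@wordpress/compose',
		'@wordpress/components',
		'@wordpress/data',
		'@wordpress/element',
		'@wordpress/hooks',
		'@wordpress/block-editor',
		'@wordpress/i18n',
	],
	// window.aiServices is a global, not an import, so its handle must be added manually.
	[ 'ais-ai-store' ]
);

console.log( deps );
```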

As an additional safeguard, we should only enqueue the JavaScript file if the AI Services plugin is in fact active, since otherwise the JavaScript functionality wouldn’t work. This check is all the more important because WordPress Core only started supporting plugin dependencies in version 6.5, so on older versions the AI Services plugin isn’t guaranteed to be active when our plugin is. The easiest and recommended way to check is to test for the existence of the ai_services() function: If it doesn’t exist, the AI Services plugin is not active, and we shouldn’t enqueue the JavaScript file.

Here is the full code needed for our plugin main file:

```php
<?php
/**
 * Plugin Name: Add Image Alt Text
 * Plugin URI: https://felix-arntz.me/blog/writing-a-wordpress-plugin-to-generate-image-alt-text-with-ai-services/
 * Description: Example plugin using AI Services to implement an image control to generate image alt text.
 * Requires at least: 6.0
 * Requires PHP: 7.2
 * Version: 1.0.0
 * Author: Felix Arntz
 * Author URI: https://felix-arntz.me
 * License: GPLv2 or later
 * License URI: https://www.gnu.org/licenses/old-licenses/gpl-2.0.html
 * Text Domain: add-image-alt-text
 * Requires Plugins: ai-services
 */

add_action(
	'enqueue_block_editor_assets',
	static function () {
		// Bail if the AI Services plugin is not active.
		if ( ! function_exists( 'ai_services' ) ) {
			return;
		}

		// Read asset metadata file and manually add AI Services dependency script.
		$asset_metadata = require plugin_dir_path( __FILE__ ) . 'build/index.asset.php';
		$asset_metadata['dependencies'][] = 'ais-ai-store';

		wp_enqueue_script(
			'add-image-alt-text',
			plugin_dir_url( __FILE__ ) . 'build/index.js',
			$asset_metadata['dependencies'],
			$asset_metadata['version'],
			// Load the script with `defer` attribute so that it doesn't block rendering of the editor.
			array( 'strategy' => 'defer' )
		);
	}
);
```

And that’s all we need for the PHP portion of the plugin. Since the plugin main file only serves to enqueue the JavaScript file, we’ll rarely have to modify it. All the heavy lifting happens on the JavaScript side.

At this point, you could already give the plugin a try. With the PHP main file and the built JavaScript asset in place, you should see the new toolbar button to generate alternative text in the block toolbar of the core/image block. To verify, add an image block to a post in the block editor and select an image, since the button only appears once the block has an image. Of course, clicking the toolbar button won’t do anything yet, since we haven’t implemented that part. Let’s do that next.

Implementing the AI logic to generate the image alternative text

Let’s move on to arguably the coolest part: implementing the actual AI logic to generate alternative text for the image in the current core/image block.

As outlined in the AI Services plugin’s JavaScript documentation, the first step for any AI interaction in JavaScript is to access an available AI service, using the getAvailableService() selector of the plugin’s AI store. Once loaded, the store is accessible via WordPress Core’s built-in store registry, e.g. via wp.data.select( 'ai-services/ai' ). Alternatively, you can reference wp.data.select( aiServices.ai.store ), which is a bit safer since it avoids hard-coding the store name.

By using getAvailableService(), we retrieve whichever AI service the site administrator has configured, whether that’s Anthropic, Google, OpenAI, or another one. A crucial aspect here is to provide the list of capabilities our functionality needs, so that only services supporting all of them are considered. Since we need to generate text, we need the “text_generation” capability, which is quite basic and which most AI services support in some capacity. However, since we want the model to describe an image, we also need “multimodal_input”, so that we can pass data other than just text to the model. Constants for these values are available on aiServices.ai.enums.AiCapability, so that we don’t have to hard-code them.
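The selection rule described above can be sketched in plain JavaScript. This is a hypothetical stand-in, not the AI Services plugin’s implementation: pickAvailableService(), the service object shape (slug, isAvailable, capabilities), and the example slugs are assumptions made for illustration; only the capability strings come from the text above. It shows why passing both capabilities matters: a service that only supports text generation is skipped.

```javascript
// Capability strings as named in the text; the plugin exposes its own constants for these.
const AiCapability = {
	TEXT_GENERATION: 'text_generation',
	MULTIMODAL_INPUT: 'multimodal_input',
};

// Hypothetical selection rule: first configured service that supports every required capability.
function pickAvailableService( services, requiredCapabilities ) {
	const match = services.find(
		( service ) =>
			service.isAvailable &&
			requiredCapabilities.every( ( capability ) =>
				service.capabilities.includes( capability )
			)
	);
	return match || null;
}

const services = [
	{ slug: 'text-only-service', isAvailable: true, capabilities: [ AiCapability.TEXT_GENERATION ] },
	{ slug: 'unconfigured-service', isAvailable: false, capabilities: [ AiCapability.TEXT_GENERATION, AiCapability.MULTIMODAL_INPUT ] },
	{ slug: 'multimodal-service', isAvailable: true, capabilities: [ AiCapability.TEXT_GENERATION, AiCapability.MULTIMODAL_INPUT ] },
];

const required = [ AiCapability.MULTIMODAL_INPUT, AiCapability.TEXT_GENERATION ];
const chosen = pickAvailableService( services, required );
console.log( chosen ? chosen.slug : 'no suitable service' );
```

Returning null when nothing matches corresponds to the early bail-out in the component: no suitable service, no button.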

Let’s expand our ImageControls component from above accordingly. Note that we need to retrieve the service right away in the component body, not just inside the generateAltText() callback. That’s partially because React hooks like useSelect() must be called at the top level of a component, not inside callbacks, but it also allows us to bail early in case no suitable AI service is configured on the site. Otherwise, users would still see the button, only to find out that it doesn’t work once they click it, which would be a poor experience.

Here’s the relevant portion of the updated code:

```javascript
import { createHigherOrderComponent } from '@wordpress/compose';
import { Icon, ToolbarButton } from '@wordpress/components';
import { useSelect } from '@wordpress/data';
import { useState } from '@wordpress/element';
import { addFilter } from '@wordpress/hooks';
import { BlockControls } from '@wordpress/block-editor';
import { __ } from '@wordpress/i18n';

const { enums, store: aiStore } = window.aiServices.ai;

// Additional functions (see previous code snippet).

// Define this as a constant outside the component, so that it's not a new array for every re-render.
const AI_CAPABILITIES = [
	enums.AiCapability.MULTIMODAL_INPUT,
	enums.AiCapability.TEXT_GENERATION,
];

function ImageControls( { attributes, setAttributes } ) {
	const [ inProgress, setInProgress ] = useState( false );

	// Retrieve AI service with the required capabilities.
	const service = useSelect( ( select ) =>
		select( aiStore ).getAvailableService( AI_CAPABILITIES )
	);

	// If no suitable AI service is configured, don't render a button that couldn't work.
	if ( ! service ) {
		return null;
	}

	// If no image is assigned yet, bail early since we can't generate alternative text for nothing.
	if ( ! attributes.url ) {
		return null;
	}

	const generateAltText = async () => {
		setInProgress( true );
		// TODO: Logic to actually generate the image's alternative text.
		setInProgress( false );
	};

	// Remainder of the component code (see previous code snippet).
}

// Remaining scaffolding (see previous code snippet).
```

With this in place, we can now finally implement the logic to generate the image’s alternative text inside the generateAltText() callback, using the service returned by the getAvailableService() selector.

To generate the alternative text, we need to use the service.generateText() method. While for a simple text prompt we could simply pass a string to that method, we need something slightly more complex here: As mentioned before, in order to pass both a text prompt plus the image itself to the AI model, we’ll need to pass it as a multimodal prompt (see the linked documentation for the syntax required to do so).
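As a rough illustration, the multimodal prompt boils down to a content object with a user role and a list of parts: one text part and one inlineData part carrying the MIME type and the base64-encoded image. The buildAltTextPrompt() helper below is hypothetical; it mirrors the content shape used in the generateAltText() implementation of this tutorial, and the placeholder data string is not a real image.

```javascript
// Hypothetical helper that assembles a multimodal prompt: one text part plus one inline image part.
function buildAltTextPrompt( promptText, mimeType, base64Data ) {
	return {
		role: 'user',
		parts: [
			{ text: promptText },
			{
				inlineData: {
					mimeType,
					data: base64Data,
				},
			},
		],
	};
}

const content = buildAltTextPrompt(
	'Create a brief description of what the following image shows, suitable as alternative text for screen readers.',
	'image/png',
	// Placeholder only; in the plugin this would be the result of getBase64Image().
	'data:image/png;base64,AAAA'
);

console.log( JSON.stringify( content, null, 2 ) );
```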

To send the image to the AI service API, the easiest way is to send it inline, which means we’ll need the base64-encoded version of the image. Additionally, the MIME type of the image must be provided (e.g. image/jpeg, image/png, image/webp). To get this data, we can define two helper functions, getBase64Image( url ) and getMimeType( url ), both of which receive the image URL and return the relevant data. We won’t cover the implementation of these two helpers in detail in this tutorial, but for reference you’ll find their code below. You can simply paste it into the plugin’s JavaScript file.

```javascript
function getMimeType( url ) {
	// Ignore any query string or fragment (e.g. "?ver=2") before reading the file extension.
	const path = url.split( /[?#]/ )[ 0 ];
	const extension = path.split( '.' ).pop().toLowerCase();
	switch ( extension ) {
		case 'png':
			return 'image/png';
		case 'gif':
			return 'image/gif';
		case 'avif':
			return 'image/avif';
		case 'webp':
			return 'image/webp';
		case 'jpg':
		case 'jpeg':
		default:
			return 'image/jpeg';
	}
}

async function getBase64Image( url ) {
	const response = await fetch( url );
	if ( ! response.ok ) {
		throw new Error( `Failed to fetch image: HTTP ${ response.status }` );
	}
	const blob = await response.blob();

	// Read the image as a base64-encoded data URL.
	return new Promise( ( resolve, reject ) => {
		const reader = new FileReader();
		reader.onload = () => resolve( reader.result );
		reader.onerror = () => reject( reader.error );
		reader.readAsDataURL( blob );
	} );
}
```

With those two functions in place, we can implement the generateText() call. Since any AI API call may fail for various reasons, we need to wrap it in a try...catch statement.

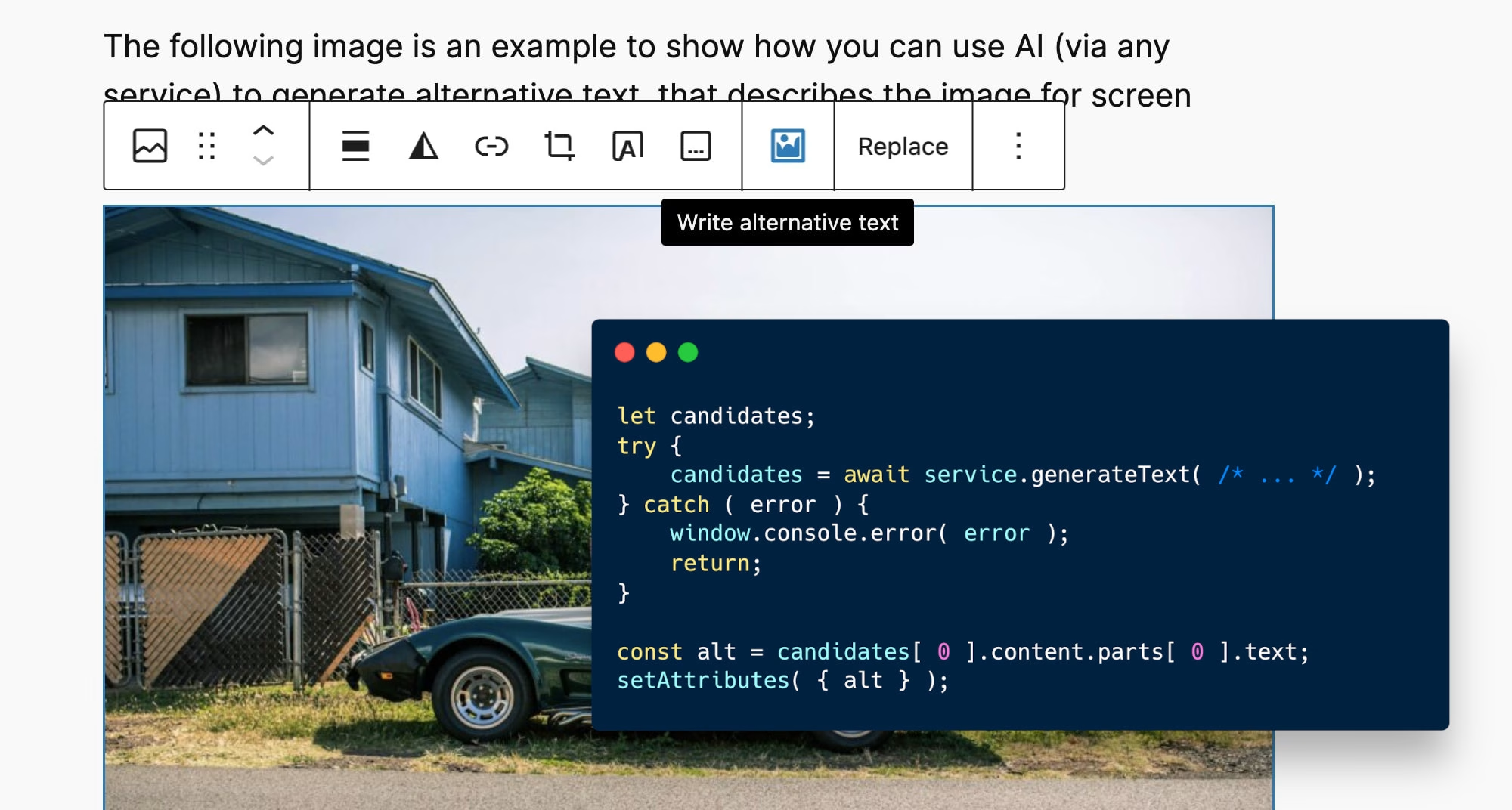

Note also that the generateText() method returns a complex data shape of multiple potential response “candidates”, so it won’t just return a string. This is necessary to cater for more complex data formats like multimodal outputs, or scenarios where you’d like to receive multiple alternative responses. You can learn more about this in the documentation about processing AI service responses.

To get the alternative text from the response candidates, we could use the text from the first content part of the first candidate, assuming that only one candidate is returned (as we didn’t configure the model to provide more than one) and that the response is text-only. In other words, we’d look at candidates[ 0 ].content.parts[ 0 ].text. Strictly speaking, however, that’s not the most reliable approach, and it’s tedious too. For such scenarios, the AI Services plugin provides helper functions as part of its API, which you can access via aiServices.ai.helpers.

Last but not least, since some models may return excessive newlines, it’s a good idea to trim them. Once we have the alternative text string, we can simply set it as the block’s alt attribute by using the setAttributes() function which, as mentioned earlier in this tutorial, the ImageControls component has access to via props.
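Both steps can be sketched without the plugin’s helpers. The extractAltText() function below is hypothetical and only approximates what the two paragraphs above describe: it reads the text parts of the first candidate (joining several text parts with a newline is an assumption), then collapses runs of three or more newlines and trims surrounding whitespace. The sample candidates array is made up to match the candidates[ 0 ].content.parts[ 0 ].text shape; in the plugin itself, prefer the helpers from aiServices.ai.helpers.

```javascript
// Hypothetical helper: text of the first candidate, with excessive newlines cleaned up.
function extractAltText( candidates ) {
	const first = candidates && candidates[ 0 ];
	const parts = ( first && first.content && first.content.parts ) || [];
	const text = parts
		.filter( ( part ) => typeof part.text === 'string' )
		.map( ( part ) => part.text )
		.join( '\n' );
	// Collapse runs of 3+ newlines into one blank line, then trim leading/trailing whitespace.
	return text.replace( /\n{3,}/g, '\n\n' ).trim();
}

// Made-up response shape for illustration only.
const sampleCandidates = [
	{
		content: {
			role: 'model',
			parts: [ { text: '\nA red bicycle leaning against a brick wall.\n\n\n\nA cat sits next to it.\n' } ],
		},
	},
];

console.log( extractAltText( sampleCandidates ) );
```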

Let’s complete the implementation of our generateAltText() callback.

```javascript
const generateAltText = async () => {
	setInProgress( true );

	let candidates;
	try {
		// Fetching the image can fail too, so it happens inside the try block as well.
		const mimeType = getMimeType( attributes.url );
		const base64Image = await getBase64Image( attributes.url );

		candidates = await service.generateText(
			{
				role: 'user',
				parts: [
					{
						text: __(
							'Create a brief description of what the following image shows, suitable as alternative text for screen readers.',
							'add-image-alt-text'
						),
					},
					{
						inlineData: {
							mimeType,
							data: base64Image,
						},
					},
				],
			},
			{
				// Every AI Services call requires a unique feature identifier,
				// e.g. the plugin slug. Additionally, we pass the capabilities
				// we need so that a sufficiently capable model is used.
				feature: 'add-image-alt-text',
				capabilities: AI_CAPABILITIES,
			}
		);
	} catch ( error ) {
		window.console.error( error );
		setInProgress( false );
		return;
	}

	const helpers = window.aiServices.ai.helpers;
	const alt = helpers
		.getTextFromContents( helpers.getCandidateContents( candidates ) )
		// Collapse excessive blank lines and trim surrounding whitespace.
		.replace( /\n{3,}/g, '\n\n' )
		.trim();

	setAttributes( { alt } );
	setInProgress( false );
};
```

Notice the error handling too: If fetching the image or the AI request fails, we simply log a console error with further details. It’s also critical to always set the inProgress state back to false at the end of the process, regardless of whether it succeeded, so that our toolbar button to generate the alternative text doesn’t remain disabled.
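If you prefer, the same guarantee can be expressed with a finally block, which runs whether the task succeeds or throws. The runWithProgress() helper below is a hypothetical sketch, not part of the AI Services API; a plain function that records values stands in for React’s setInProgress, so the snippet runs outside the editor.

```javascript
// Hypothetical helper: toggles a progress flag around an async task and always resets it.
async function runWithProgress( setInProgress, task ) {
	setInProgress( true );
	try {
		return await task();
	} finally {
		// Runs on success and on failure, so the flag is never left set.
		setInProgress( false );
	}
}

// Stand-in for React's state setter that records every value it receives.
const progressLog = [];
const setInProgress = ( value ) => progressLog.push( value );

runWithProgress( setInProgress, async () => {
	throw new Error( 'Simulated AI request failure' );
} )
	.catch( ( error ) => console.error( error.message ) )
	.then( () => console.log( progressLog ) );
```

Error reporting still happens in the caller’s catch, while the reset lives in one place instead of being repeated in every exit path.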

At this point, we have completed the entire plugin implementation, so we can give it a final test. Before that, you may want to sanity-check your code by running npm run lint-js. If it flags any violations, address those first; for code style and formatting violations specifically, you can run npm run format to fix them automatically.

If everything looks good, rerun the plugin build via npm run build. You can then load a post in the block editor and add a new image block with an image you’d like to generate alternative text for. Click the plugin’s new toolbar button, wait a few seconds, and the generated alternative text should appear in the image block sidebar. And that’s it: We’ve successfully created a plugin that uses AI to generate alternative text, and the AI portion of it boils down to only two function calls, getAvailableService() and generateText().

Summary

I hope this tutorial has been helpful as a hands-on guide to the powerful capabilities that you can implement on top of the AI Services plugin.

You could take this idea a step further, e.g. by building a UI that allows iterating on the alternative text. Let’s say you’re not happy with the generated alternative text, so you would like to regenerate it. That’s already possible with what we’ve built in this tutorial, but you wouldn’t be able to compare the results, since the new alternative text would simply replace the old one. A modal showing both alternative texts side by side could serve as a comparison UI, where the user can choose which generated alternative text to keep.
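One possible starting point for such a comparison UI is a small piece of state that keeps the current alternative text and a freshly generated candidate side by side until the user decides. The reducer below is a hypothetical sketch (the state shape and action names are made up), written as a plain function so it could be used with React’s useReducer() or tested on its own.

```javascript
// Hypothetical state shape: the alt text in use plus an optional newly generated candidate.
const initialState = { current: '', candidate: null };

function altTextComparisonReducer( state, action ) {
	switch ( action.type ) {
		case 'CANDIDATE_GENERATED':
			// Keep the existing alt text; hold the new one next to it for comparison.
			return { ...state, candidate: action.alt };
		case 'CANDIDATE_ACCEPTED':
			// Replace the current alt text with the candidate, if there is one.
			return state.candidate === null
				? state
				: { current: state.candidate, candidate: null };
		case 'CANDIDATE_REJECTED':
			// Discard the candidate and keep the current alt text.
			return { ...state, candidate: null };
		default:
			return state;
	}
}

let state = { ...initialState, current: 'A dog on a beach.' };
state = altTextComparisonReducer( state, {
	type: 'CANDIDATE_GENERATED',
	alt: 'A golden retriever running along a sandy beach.',
} );
console.log( state );
state = altTextComparisonReducer( state, { type: 'CANDIDATE_ACCEPTED' } );
console.log( state );
```

Only an accepted candidate would then be written to the block via setAttributes( { alt } ), so regenerating never silently overwrites text the user preferred.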

For reference, you can find the full implementation of this plugin among the examples in the AI Services GitHub repository, in a slightly modified variant that doesn’t use a build process. That said, should you want to turn this into a real plugin, the approach outlined in this tutorial is recommended over the one used for that example. I only omitted the build process there so that the example code can be used as-is; for developer experience, having a build process as outlined here is much better.

As mentioned in my initial post that announced the AI Services plugin for WordPress, I am looking for feedback on the plugin’s APIs, and contributions are welcome. If you’re building something on top of the AI Services plugin, I’d love to learn more about it. Please feel free to share feedback or contribute in the AI Services plugin’s GitHub repository.
